The Critical Thinking Paradox

AI erodes the very skills needed for success

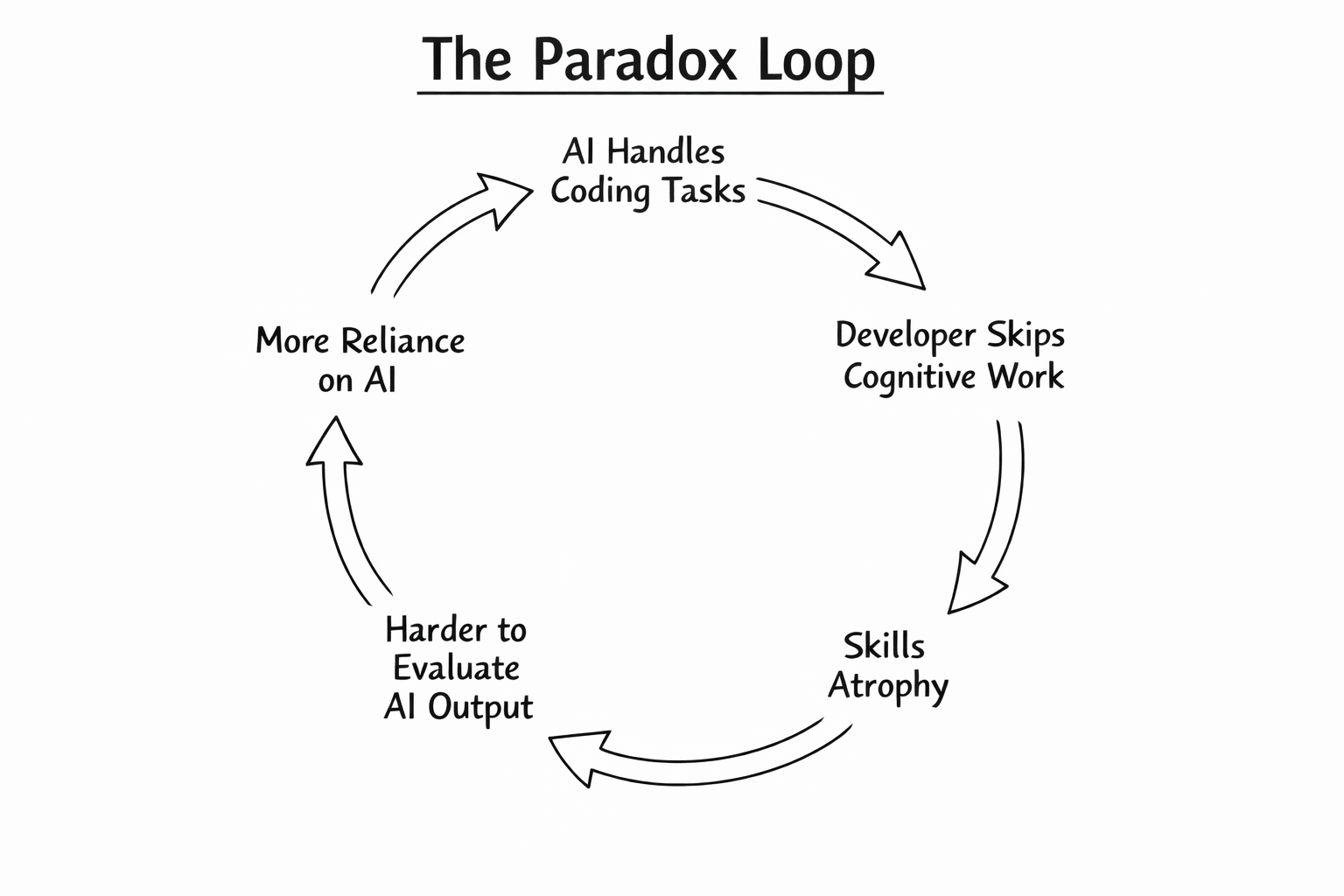

AI coding tools have a problem. They require strong critical thinking to use effectively, yet they erode the very skills on which they depend. The better AI gets at writing code, the worse we become at evaluating it.

When developers delegate thinking to AI, they shift from solving problems to orchestrating code production. Developers move from “independent thinking, manual coding, iterative debugging” to “AI-assisted ideation, interactive programming, collaborative optimization.” Researchers call this material disengagement: developers selectively supervise the AI’s output rather than engaging deeply with it.

Anthropic’s January 2026 study provides the evidence. In a randomized trial with 52 software engineers learning a new Python library, participants using AI scored 17% lower on comprehension tests than those who coded manually, with debugging as the largest gap. Developers using AI couldn’t identify errors or explain why the code failed.

The speed gains weren’t statistically significant. Participants using AI finished approximately two minutes faster on average, but spent up to 30% of their time composing queries. For learning tasks, AI offers little or no real speedup while reducing what you learn.

Developers get trapped in a vicious cycle. They submit incorrect AI-generated code, ask the AI to fix it, receive more incorrect code, and repeat the process. Only a minority analyzes what the AI produces. Fixing the prompt feels easier than understanding the code.

Meanwhile, enterprises measure velocity rather than understanding. Stack Overflow’s 2025 survey found that 84% of developers use or plan to use AI tools, yet only 33% trust their accuracy. The top source of frustration, cited by 66%, is solutions that are “almost right, but not quite.” We optimize for the wrong metrics. Output without learning. Speed without comprehension.

Why Critical Thinking Matters

AI-generated code looks correct. That’s the problem. It compiles, passes basic tests, and reads like something a competent developer wrote. But CodeRabbit’s analysis of 470 pull requests found that AI-generated code produces 1.7x more issues than human-written code. Security vulnerabilities appear twice as often. Logic errors increase 75%. These aren’t obvious failures. They’re subtle defects that slip through review and surface in production.

AI doesn’t understand your codebase. It doesn’t know your architecture, business rules, or domain constraints. Veracode’s 2025 report found that AI introduced security vulnerabilities in 45% of coding tasks because models optimize for the shortest path to a passing result, not the safest one. The code works in isolation but ignores the system it’s joining. One development leader described it bluntly: “Very difficult to maintain, very difficult to understand, and in a production environment, none of that is suitable.”
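A hypothetical illustration of “shortest path to a passing result” (not an example taken from the Veracode report): both lookup functions below pass the obvious happy-path check, but the first builds SQL by string interpolation and the second uses a parameterized query. The sketch uses only Python’s built-in `sqlite3` module; the `users` table and all function names are invented for illustration.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Shortest path: interpolate input into the SQL string.
    # Passes the happy-path test, but a crafted name can rewrite the query.
    query = f"SELECT name, email FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized query: the driver binds `name` as a value, never as SQL.
    query = "SELECT name, email FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


if __name__ == "__main__":
    conn = make_db()
    # Both versions pass the obvious test...
    assert find_user_unsafe(conn, "alice") == find_user_safe(conn, "alice")
    # ...but only one survives hostile input.
    payload = "x' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # 2: every row is returned
    print(len(find_user_safe(conn, payload)))    # 0: no user has that name
```

Nothing about the unsafe version looks wrong in isolation. Spotting it requires knowing that `name` can come from an untrusted caller, which is exactly the system context the model lacks.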

The debt compounds. GitClear’s analysis of 211 million lines of code found that code duplication surged 8-fold between 2022 and 2024. Copy-pasted lines now exceed refactored lines. MIT professor Armando Solar-Lezama called AI a “brand new credit card that is going to allow us to accumulate technical debt in ways we were never able to do before.” A Harness survey found that 67% of developers now spend more time debugging AI-generated code than writing it themselves.

Critical thinking matters more now because the cost of skipping it is higher. Bad code from a junior developer triggers scrutiny. Confident-sounding code from AI bypasses it. The volume overwhelms reviewers. The plausibility defeats skepticism. Without deliberate evaluation, technical debt accumulates until the only path forward is a costly rewrite.

Inverting the Workflow

The current workflow runs backward. AI writes code and humans fix, review, and maintain it. This inverts learning and produces developers who can’t function when AI fails.

Addy Osmani describes a different approach: “AI-augmented software engineering rather than AI-automated software engineering.” The distinction matters. He uses AI aggressively while staying “proudly accountable for the software produced.”

The fix is simple in concept. Flip the workflow: humans plan and create, AI refactors and maintains, humans verify. Let AI handle the drudgery. Keep humans doing creative problem-solving that builds expertise.

Think Before You Prompt

Write your own requirements. Make your own architecture decisions. Draft your own test cases. Then use AI for validation: “Did you consider this option? Here are the trade-offs.” Get education, not answers.
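A minimal sketch of drafting your own test cases first, using Python’s standard `unittest` module. The `parse_duration` function and its rules are hypothetical; what matters is that the tests, edge cases included, encode your understanding of the requirement before any code is generated. The implementation shown is only a stand-in for whatever you or an AI later write.

```python
import re
import unittest


def parse_duration(text: str) -> int:
    """Convert strings like '1h30m' or '45s' to seconds (hypothetical spec).

    Stand-in implementation; the test class below is the part you write first.
    """
    match = re.fullmatch(r"(?:(\d+)h)?(?:(\d+)m)?(?:(\d+)s)?", text)
    # The pattern is all-optional, so the empty string must be rejected explicitly.
    if not text or match is None:
        raise ValueError(f"invalid duration: {text!r}")
    hours, minutes, seconds = (int(g) if g else 0 for g in match.groups())
    return hours * 3600 + minutes * 60 + seconds


class ParseDurationSpec(unittest.TestCase):
    """Requirements written by a human before any code was generated."""

    def test_combined_units(self):
        self.assertEqual(parse_duration("1h30m"), 5400)

    def test_single_unit(self):
        self.assertEqual(parse_duration("45s"), 45)

    def test_rejects_empty_string(self):
        # Edge case a quick draft might silently map to 0.
        with self.assertRaises(ValueError):
            parse_duration("")

    def test_rejects_unknown_unit(self):
        with self.assertRaises(ValueError):
            parse_duration("10d")
```

Run it with `python -m unittest` followed by the file path. When you then ask an AI to critique your approach, the tests give you something concrete to check its suggestions against.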

Osmani’s workflow starts with brainstorming a detailed specification with the AI, then outlining a step-by-step plan, before writing any actual code. “This upfront investment might feel slow, but it pays off enormously.” He likens it to doing “waterfall in 15 minutes,” a rapid structured planning phase that makes subsequent coding smoother.

The key insight: if you ask the AI for too much at once, “it’s likely to get confused or produce a jumbled mess that’s hard to untangle.” Developers report AI-generated code that feels “like 10 devs worked on it without talking to each other.” The fix is to stop, back up, and split the problem into smaller pieces.

Apply Systematic Review

GitHub’s research found that developers using Copilot wrote 13.6% more lines of code with fewer readability errors, but only under rigorous human review. Use a structured rubric that checks for inconsistent naming, unclear identifiers, excessive complexity, missing documentation, and repeated code. Review is where learning happens.
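A rough sketch of turning that rubric into a repeatable check, using Python’s standard `ast` module. The thresholds, the snake_case convention, and the set of allowed short names are assumptions you would tune to your own codebase, and every check is deliberately crude; a real linter does far more. The point is that the rubric becomes explicit, so review doesn’t depend on mood or fatigue.

```python
import ast
import re
from collections import Counter

SNAKE_CASE = re.compile(r"^[a-z_][a-z0-9_]*$")  # assumed project convention
ALLOWED_SHORT_NAMES = {"i", "j", "k", "x", "y", "_"}
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.Try, ast.With)


def review(source: str, max_branches: int = 5, min_repeat_len: int = 20) -> list:
    """Return rubric findings for Python source (a crude sketch, not a linter)."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Missing documentation
            if ast.get_docstring(node) is None:
                findings.append(f"{node.name}: missing docstring")
            # Inconsistent naming
            if not SNAKE_CASE.match(node.name):
                findings.append(f"{node.name}: not snake_case")
            # Excessive complexity, approximated by counting branching statements
            branches = sum(isinstance(n, BRANCH_NODES) for n in ast.walk(node))
            if branches > max_branches:
                findings.append(f"{node.name}: {branches} branches (limit {max_branches})")
        # Unclear identifiers: single-letter names being assigned
        elif isinstance(node, ast.Name) and isinstance(node.ctx, ast.Store):
            if len(node.id) == 1 and node.id not in ALLOWED_SHORT_NAMES:
                findings.append(f"line {node.lineno}: unclear name '{node.id}'")
    # Repeated code: identical non-trivial lines appearing more than once
    lines = [ln.strip() for ln in source.splitlines() if len(ln.strip()) > min_repeat_len]
    for line, count in Counter(lines).items():
        if count > 1:
            findings.append(f"repeated {count}x: {line}")
    return findings


if __name__ == "__main__":
    sample = '''
def getTotal(items):
    t = 0
    for item in items:
        t += item.price * item.quantity
    return t

def get_tax(items):
    """Return the tax owed on items."""
    t = 0
    for item in items:
        t += item.price * item.quantity
    return t * 0.2
'''
    for finding in review(sample):
        print(finding)
```

The script flags; it doesn’t judge. Deciding whether a flagged `t` or a repeated line actually matters is still your call.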

Osmani treats every AI-generated snippet as if it were produced by a junior developer. “I read through the code, run it, and test it as needed. You absolutely have to test what it writes.” He sometimes spawns a second AI session to critique code produced by the first. “The key is to not skip the review just because an AI wrote the code. If anything, AI-written code needs extra scrutiny.”

Set Intentional Boundaries

A study found that participants who succeeded limited AI’s contribution to approximately 30%. Reserve creative and high-level decisions for yourself. Use AI for boilerplate, not core problem-solving. After each interaction, ask: “Did I learn anything?”
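One hypothetical way to keep that boundary visible: log each piece of work with a rough count of lines you wrote versus lines the AI wrote, then flag when the AI’s share drifts past 30% or when AI output lands in core problem-solving work. Measuring contribution by line count is an assumption of this sketch, and the study may have measured it differently; the `Change` record and its fields are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Change:
    """One unit of work, e.g. a commit. Line counts are your own rough estimates."""
    description: str
    lines_by_ai: int
    lines_by_me: int
    is_core: bool  # creative or high-level problem-solving you meant to keep


def ai_share(changes: list) -> float:
    """Fraction of all logged lines attributed to AI (0.0 when nothing is logged)."""
    ai_lines = sum(c.lines_by_ai for c in changes)
    total = ai_lines + sum(c.lines_by_me for c in changes)
    return ai_lines / total if total else 0.0


def check_boundaries(changes: list, limit: float = 0.30) -> list:
    """Warn when AI's share exceeds `limit` or when AI wrote lines in core work."""
    warnings = []
    share = ai_share(changes)
    if share > limit:
        warnings.append(f"AI wrote {share:.0%} of lines (target <= {limit:.0%})")
    delegated_core = [c.description for c in changes if c.is_core and c.lines_by_ai > 0]
    if delegated_core:
        warnings.append("AI-written lines in core work: " + ", ".join(delegated_core))
    return warnings


if __name__ == "__main__":
    week = [
        Change("parser core", lines_by_ai=0, lines_by_me=120, is_core=True),
        Change("CLI boilerplate", lines_by_ai=80, lines_by_me=10, is_core=False),
        Change("retry logic", lines_by_ai=40, lines_by_me=30, is_core=True),
    ]
    for warning in check_boundaries(week):
        print(warning)
    # prints:
    # AI wrote 43% of lines (target <= 30%)
    # AI-written lines in core work: retry logic
```

The numbers will be approximate. Their job is to prompt the question, not to answer it.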

Anthropic’s research revealed distinct interaction patterns with dramatically different outcomes. The lowest-scoring developers delegated the work entirely to AI. The highest-scoring developers asked only conceptual questions while coding independently. They used AI for understanding, not production.

The pattern that combined speed with strong learning: “Generation-then-Comprehension.” Generate code, then ask follow-up questions to understand it. Alternatively, the “Hybrid Code-Explanation” approach: request code and explanations simultaneously. Slower, but you actually learn.

Build Fast Feedback Loops

Generate code during planning to see implications early. Test AI output immediately. Don’t accumulate tech debt. Treat AI drafts as conversation starters, not final products.

Osmani weaves testing into the workflow itself. “If I’m using a tool like Claude Code, I’ll instruct it to run the test suite after implementing a task, and have it debug failures if any occur.” This tight feedback loop (write code, run tests, fix) works because the AI excels at it, provided the tests exist.

Without tests, the agent might assume everything is fine when in reality it’s broken several things. So invest in tests: they amplify the AI’s usefulness and your confidence in the result.

Maintain Skills Deliberately

Spend a few hours weekly on manual coding. Read documentation, not AI explanations. Talk to both AI optimists and skeptics. The goal isn’t rejecting AI. It’s remaining competent when AI fails.

Osmani addresses this directly: “For those worried that using AI might degrade their abilities, I’d argue the opposite, if done right. By reviewing AI code, I’ve been exposed to new idioms and solutions. By debugging AI mistakes, I’ve deepened my understanding.”

The catch is the “if done right” part. You must stay informed, actively reviewing and understanding everything. Otherwise, you’re just outsourcing your judgment to a statistical engine.

The Stakes

We’re creating a workforce that generates code quickly but cannot debug when AI fails, cannot understand system architecture, cannot maintain code over the long term, and cannot develop the expertise required to become senior engineers.

Here’s the uncomfortable truth from Anthropic’s research: developers rate disempowering interactions more favorably. They actively seek complete solutions. They accept AI output without pushback. The disempowerment isn’t manipulation. It’s voluntary abdication.

But none of what you’ve learned will be wasted. Problem-solving and decomposition will always matter. The question isn’t whether AI will keep improving. It will. The question is whether we improve alongside it.

Done right, AI handles the drudgery while we focus on creativity. It enables prototyping at the speed of thought. It frees mental energy for meaningful work. But only if we stay in the driver’s seat.

The developers who thrive won’t generate the most code or ship the fastest. They’ll be the ones who never stop thinking critically. They’ll treat AI as a powerful tool requiring careful thought, not a substitute for it.

Next time you reach for an AI coding tool, pause and ask yourself: Am I learning from this interaction, or outsourcing my thinking? Your answer determines whether AI amplifies or atrophies your abilities.