AI-assisted Code Reviews

Improve the Review Bottleneck Before Scaling Code Generation

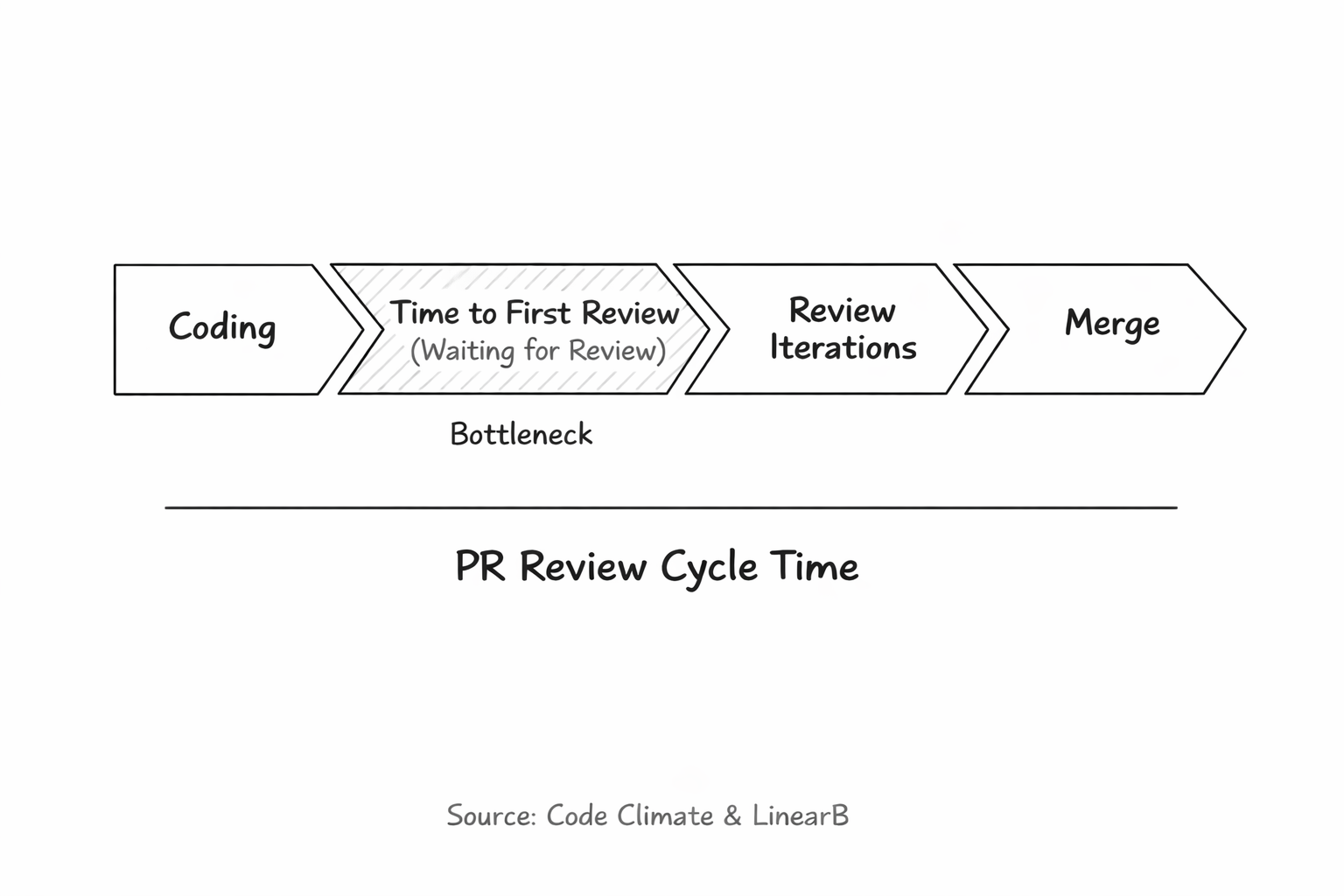

The Pull Request process has been a bottleneck for years. AI code generation didn’t create the problem, but it’s making it worse.

Before reaching for AI tools to increase code volume, fix the review process.

There’s no point in generating code faster if it sits in a queue waiting for someone to look at it. That’s not a productivity gain. It’s a traffic jam.

The symptoms are familiar to anyone who’s worked on a team larger than a handful of engineers.

Wait times are the most visible problem. Atlassian’s engineers waited an average of 18 hours for a first review comment, with median PR-to-merge times over three days. A developer submits a PR, switches context to something else, and then has to reload everything when feedback finally arrives. Each switch costs roughly 23 minutes of refocusing time.

Review responsibility tends to concentrate among a few senior engineers. They know the codebase best, so they get tagged on everything. When only the tech lead consistently reviews PRs, it creates a bottleneck that stalls the entire team. Senior engineers end up spending their time catching naming conventions and missing null checks rather than mentoring or solving harder problems.

Then there’s fatigue. SmartBear’s study of 2,500 code reviews at Cisco Systems found that defect detection drops sharply after 60 minutes of continuous review, and reviewing faster than 500 lines of code per hour causes a severe decline in effectiveness. When reviewers are exhausted, approvals become rubber stamps. The gate still exists, but it no longer catches problems.
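Those two thresholds are easy to turn into a guardrail. The following is a minimal sketch, assuming you can record how long a reviewer spent in one sitting and how many lines they covered; the `ReviewSession` record and the `fatigue_warnings` helper are hypothetical names for illustration, not part of any review tool.

```python
from dataclasses import dataclass

# Thresholds quoted above from the SmartBear study at Cisco Systems.
MAX_SESSION_MINUTES = 60
MAX_LOC_PER_HOUR = 500


@dataclass
class ReviewSession:
    """Hypothetical record of one continuous review sitting."""
    minutes: float
    lines_reviewed: int


def fatigue_warnings(session: ReviewSession) -> list[str]:
    """Return a warning for each threshold the session exceeds."""
    warnings = []
    if session.minutes > MAX_SESSION_MINUTES:
        warnings.append("session longer than 60 minutes")
    if session.minutes > 0:
        # Normalize to lines per hour so short and long sessions compare fairly.
        loc_per_hour = session.lines_reviewed / (session.minutes / 60)
        if loc_per_hour > MAX_LOC_PER_HOUR:
            warnings.append(f"review rate {loc_per_hour:.0f} LOC/hour exceeds 500")
    return warnings
```

A 90-minute sitting that covers 900 lines trips both checks, which is a reasonable signal to split the PR or hand part of it to another reviewer.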

AI coding tools increased the volume without increasing review capacity. GitHub’s Octoverse report shows monthly code pushes crossing 82 million, with about 41% AI-assisted. The number of human reviewers hasn’t changed.

The code itself is harder to review. AI-authored PRs contain 10.83 issues on average compared to 6.45 for human-written code. Security vulnerabilities appear 1.5-2x more often. And the code looks clean. AI output is polished enough to hide subtle errors, so every line needs scrutiny, and there are now more lines to review than ever before.

Increasing code volume in an already constrained review process doesn’t improve throughput. It degrades it.

AI code review tools won’t fix the fundamental capacity problem. But they address aspects of review that humans struggle to sustain: speed, consistency, and pattern recognition.

The biggest gain is eliminating the wait for first feedback. Atlassian cut PR cycle time by 45% by making AI the automated first reviewer on every PR. That 18-hour wait dropped to minutes. New engineers who used the AI reviewer merged their first PR five days faster. The code still needed human review, but authors could start fixing obvious problems immediately rather than sit idle.

Consistency is the less obvious but more durable gain. AI applies the same rules to every pull request. No bad days. No variation based on which reviewer picks up the PR. Traditional linters enforce formatting and reliably catch known anti-patterns; they should be the first layer of any review pipeline. But linters can’t evaluate whether a function name communicates intent, whether error handling is sufficient for the context, or whether a block of code duplicates logic from elsewhere. Custom lint rules are time-consuming to write and brittle to maintain. AI handles these fuzzy checks naturally. You describe the check in plain language, and it applies probabilistic judgment. Linters for deterministic rules, AI for pattern matching.
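The split between the two layers might look like the sketch below. It is an illustration under assumptions, not a real integration: `lint_bare_except` stands in for whatever linter you already run, and `ask_model` is a hypothetical callable you would wire to your AI review tool. It takes a plain-language check plus the diff and returns a finding, or `None` when it has nothing to report.

```python
import re
from typing import Callable, Optional


def lint_bare_except(diff: str) -> list[str]:
    """Deterministic layer: flag added lines that contain a bare `except:`."""
    return [
        f"bare except on added line: {line[1:].strip()}"
        for line in diff.splitlines()
        if line.startswith("+") and re.search(r"\bexcept\s*:", line)
    ]


# Fuzzy layer: checks written in plain language, taken from the examples above.
PLAIN_LANGUAGE_CHECKS = [
    "Does each new function name communicate intent?",
    "Is error handling sufficient for the context?",
    "Does this change duplicate logic that already exists elsewhere?",
]


def review(diff: str, ask_model: Callable[[str, str], Optional[str]]) -> list[str]:
    """Run the deterministic rule first, then each plain-language check."""
    findings = lint_bare_except(diff)
    for check in PLAIN_LANGUAGE_CHECKS:
        answer = ask_model(check, diff)  # hypothetical model call
        if answer:
            findings.append(answer)
    return findings
```

Keeping the model behind a single callable means the deterministic rule stays testable on its own, and the plain-language checks can be edited without touching code.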

Think of it as triage. AI handles the first pass, catches common mistakes, and flags obvious security issues. By the time a senior engineer opens the PR, most of the noise is gone. They can focus on architecture, business logic, and mentoring.

AI operates within a limited context. Maintainability, scalability, and architectural fit demand judgment that current models can’t provide.

Senior engineers earn that title precisely because they connect code changes to business needs, architectural direction, and long-term system health. That connective reasoning is the review work that matters most. Graphite’s analysis put it simply: AI can tell you code is inefficient, but only a senior developer can explain why a different approach works better for your specific system.

Even a perfect automated review that caught every defect would still fall short. Preventing bugs isn’t the only reason for a code review. A human must be accountable for every change that reaches production. No tool changes that.

Code review also serves purposes that don’t show up in cycle time metrics. It’s how senior engineers mentor juniors, how teams share context, and how better solutions surface through discussion.

The instinct when AI tools arrive is to prioritize code generation. That’s backward. The constraint was never writing code. It was proving that the code works.

The organizations seeing results with their AI adoption started with review, not generation. Atlassian’s 45% cut in PR cycle time came from putting AI first in the review queue. The biggest gain wasn’t catching more bugs. It was eliminating the wait for the first feedback.

But there’s a trap. Out of the box, most AI review tools produce more noise than signal. They flag style preferences, suggest unnecessary refactors, and generate comments that don’t warrant attention. A developer who gets fifteen low-value comments on their first AI-reviewed PR will ignore comment sixteen, even if it’s the one that matters. Fine-tuning the AI instructions determines whether it becomes part of the workflow or is quietly disabled after a month.
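Tuning the instructions is the main lever. A complementary option, if your tool exposes its comments in structured form, is to filter them before they reach the author. The sketch below assumes that each comment carries a category and a severity score; the `ReviewComment` fields, the 1 to 5 scale, and the default limits are all hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class ReviewComment:
    """Hypothetical structured comment from an AI reviewer."""
    category: str  # e.g. "security", "bug", "style", "refactor"
    severity: int  # assumed scale: 1 (nit) to 5 (blocker)
    message: str


# Categories the text above identifies as typical noise.
SUPPRESSED_CATEGORIES = {"style", "refactor"}


def filter_comments(
    comments: list[ReviewComment],
    min_severity: int = 3,
    max_comments: int = 5,
) -> list[ReviewComment]:
    """Drop noisy categories and low-severity items, then keep the top few."""
    kept = [
        c for c in comments
        if c.category not in SUPPRESSED_CATEGORIES and c.severity >= min_severity
    ]
    # Most severe first, so the cap never hides the comment that matters.
    kept.sort(key=lambda c: c.severity, reverse=True)
    return kept[:max_comments]
```

The cap is the point: five comments that get read beat fifteen that get skimmed.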

The sequencing matters. Measure your current review process. Know your cycle times, your reviewer load distribution, and your change failure rate. Introduce AI review on routine code first, where the cost of a false positive is low, and the team can calibrate without pressure. Validate that senior engineers are spending less time on repetitive checks and more on architecture, business logic, and mentoring. Then, once the review infrastructure can handle increased volume, scale code generation.
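The measurement step can start small. Here is a minimal sketch of a baseline, assuming you can export one record per merged PR with timestamps, the reviewer, and whether the change later caused a failure. The `PullRequest` fields are illustrative names, not the schema of any particular platform.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class PullRequest:
    """Hypothetical PR record; field names are illustrative."""
    opened_at: datetime
    first_review_at: datetime
    merged_at: datetime
    reviewer: str
    caused_failure: bool  # True if the change led to an incident or rollback


def _hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def review_baseline(prs: list[PullRequest]) -> dict:
    """Summarize cycle times, reviewer load, and change failure rate.

    Expects at least one PR; an empty list raises an error.
    """
    load = Counter(pr.reviewer for pr in prs)
    return {
        "median_hours_to_first_review": median(
            _hours(pr.first_review_at - pr.opened_at) for pr in prs
        ),
        "median_hours_to_merge": median(
            _hours(pr.merged_at - pr.opened_at) for pr in prs
        ),
        # Share of reviews handled by each person; a skewed split is the
        # concentration problem described earlier.
        "reviewer_share": {name: n / len(prs) for name, n in load.items()},
        "change_failure_rate": sum(pr.caused_failure for pr in prs) / len(prs),
    }
```

Run it once before introducing AI review and again after a few weeks. If time to first review drops but the reviewer share is still concentrated on one or two people, the repetitive checks have not yet moved off their plates.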

Generating code faster is easy. The hard part is building the review process that keeps up.